Project Overview

The Quality Assessment in Science Professional Development (QAS PD) project is a joint research endeavor between researchers and teacher educators at the University of Notre Dame and Stanford University investigating teachers' conceptions and practices across a year-long intervention using portfolios of assessment and data-use artifacts. This work builds on previous iterations of QAS Notebook studies in collaboration with Felipe Martinez (PI) at UCLA and Brian Stecher (Co-PI) at RAND Corporation, funded in part by the Spencer Foundation and the W.T. Grant Foundation.

In the current study, we use the Notebook as a means for professional development (PD). Teams of middle school science teachers from four schools in two states were trained to reflect on the artifacts in these portfolios (assessment tasks, student samples, and annotations of the artifacts) in light of nine dimensions of effective practice. Drawn from the literature, the nine Dimensions used during the PD and the professional learning communities (PLCs) are:

Dimension 1: Setting Clear Learning Goals

Dimension 2: Aligning Assessments to Goals

Dimension 3: Assessing Frequently

Dimension 4: Varying Assessment

Dimension 5: Assessing with Appropriate Cognitive Complexity

Dimension 6: Reflecting Math/Science Practices

Dimension 7: Involving Students in Own Assessment

Dimension 8: Providing Specific Feedback

Dimension 9: Using Evidence of Student Thinking to Adapt Instruction

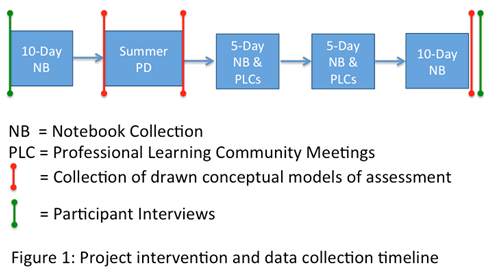

Participating teachers collected an initial 10-day Notebook before an intensive professional development in which they were introduced to the Dimensions and used them to reflect on their own practice (Figure 1). During the school year, teachers met monthly in PLCs, collecting shorter 5-day Notebooks and reflecting on specific Dimensions. A final Notebook was collected one year after the initial baseline collection. The research team analyzed the Notebooks blind to participant and time.

Initial Findings

Emerging results show that, on average, right after the PD, teachers' conceptions of assessment and data-use practice shifted from a focus on isolated types of assessments to conceptual frameworks more reflective of the nine dimensions. Nine months later, their conceptions reflected fewer dimensions but were more likely to incorporate feedback loops in which evidence of student thinking was used to inform instruction beyond mere reteaching. Qualitative and quantitative analyses of participants' practice, as captured in the baseline and final portfolios, indicated that the dimensions related to helping students close the gap between their current understanding and the learning goal improved over time only if teachers already showed proficiency in dimensions like 'setting clear learning goals' and 'developing assessments with appropriate cognitive complexity'. Otherwise, even teachers whose conceptions (as articulated in interviews and drawn conceptual maps) reflected explicit ideas about using data to inform instruction showed few changes in their data-based decision-making practice. These findings reveal cases of professional growth in assessment and data-use practice as well as a possible developmental trajectory for improving in specific dimensions of practice.